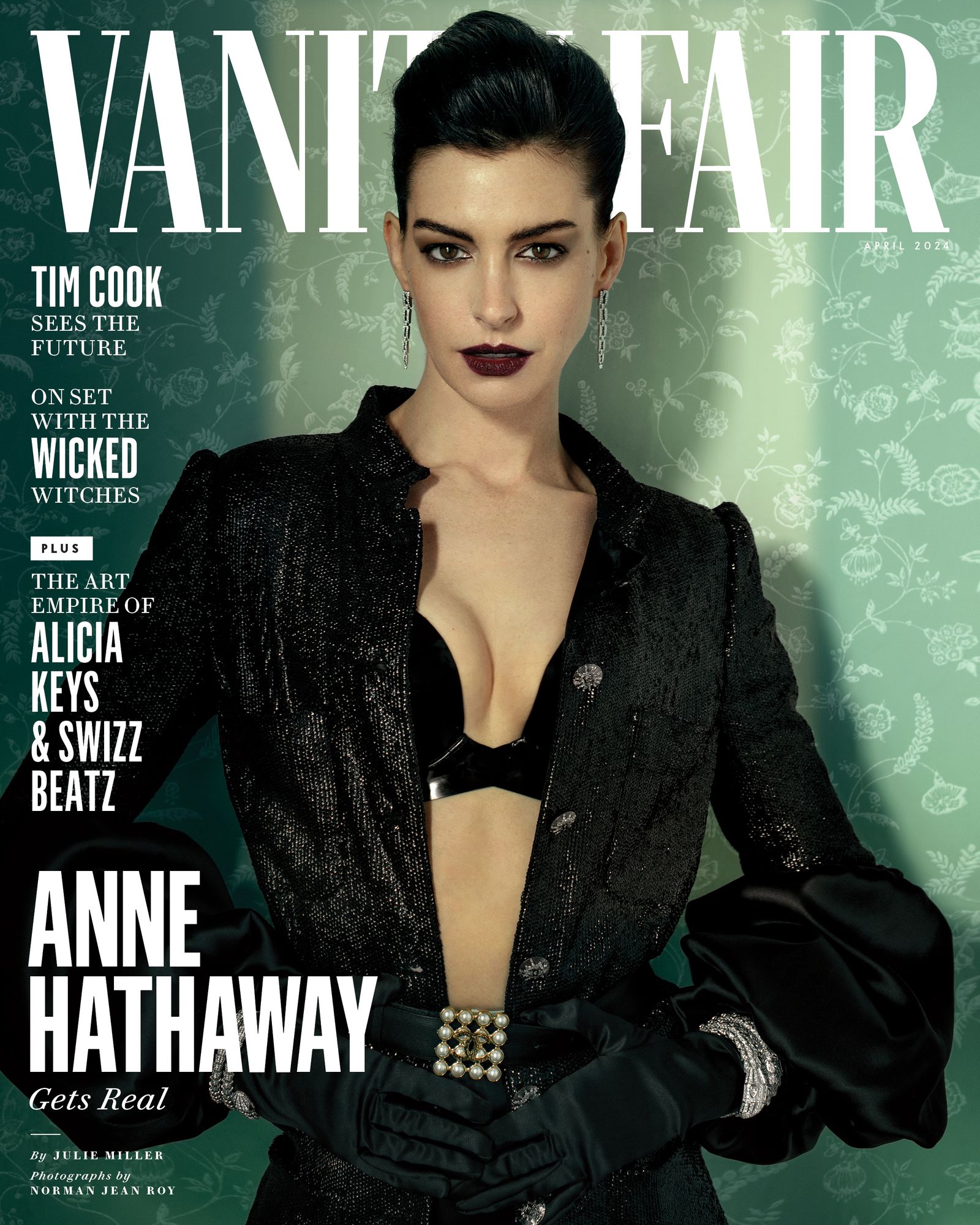

Deepfakes, hyper-realistic videos or images manipulated with AI, are no longer limited to sci-fi movies. Today they're mainstream, and celebrities like Anne Hathaway have become prime targets for this digital trickery. For social media users and privacy-conscious adults everywhere, spotting and avoiding deepfake scams is a crucial 21st-century skill. This guide explores how to identify celebrity deepfakes, the latest scams making headlines, and practical steps to protect yourself from AI-driven misinformation.

Why Celebrity Deepfakes Are a Growing Concern

The rise of deepfake technology has opened the floodgates to a new era of digital deception. Because of their fame and vast online presence, celebrities are frequent victims. Deepfakes can damage their reputations, and they also trick fans and the general public into believing, and sometimes sharing, fake news, controversial clips, or even explicit content. Anne Hathaway’s recent experiences with AI-generated hoaxes show how serious the problem has become.

Case Study: The Viral Anne Hathaway '6.9 Joke' Deepfake

A viral video claimed to show Anne Hathaway making a crude joke during a 'Daily Show' appearance with Jon Stewart. The clip looked genuine, but fact-checkers revealed it was a deepfake. Hathaway never made the remark. The clip was manipulated to put words in her mouth by blending real video with fake audio and visuals. The incident is a textbook example of how easily misinformation spreads, even among sharp-eyed fans.

Curious about the facts? The viral Anne Hathaway video has been debunked. For the full story, see "Is the Anne Hathaway 6.9 joke real?"

- Deepfakes can convincingly alter what a person appears to say or do

- Viral sharing increases the risk of widespread misinformation

- Fact-checking is critical before reacting or sharing

How to Spot a Deepfake: Warning Signs and Red Flags

It’s getting harder to spot a fake, but many deepfakes still have telltale flaws. If you stay alert and learn what to look for, you are much less likely to be fooled. Here are practical tips for identifying deepfake content:

- Uncanny Facial Movements: Watch for unnatural blinking, lip-sync issues, or stiff expressions.

- Audio Mismatch: If the audio sounds off or doesn’t match the mouth movements, be skeptical.

- Odd Lighting and Skin Texture: Deepfakes often struggle with complex lighting, leaving faces looking waxy or inconsistent.

- Context Clues: If a celebrity suddenly appears in a bizarre or out-of-character situation, pause and verify.

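- Reverse Image Search: Use tools like Google Images or TinEye to find earlier or original versions of an image, or of a screenshot taken from a video. Comparing the results can reveal whether the content was altered or lifted from another source.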

"If it feels too outrageous, too salacious, or too perfectly viral to be true—fact-check first. That moment of skepticism can save you a world of embarrassment."

Recent Scams: AI Chatbots and Explicit Deepfake Content

Beyond viral videos, scammers are using AI to create fake chatbots that impersonate celebrities, sometimes in intimate or flirty conversations. In 2025, reports revealed that Meta platforms hosted bots claiming to be Anne Hathaway, Scarlett Johansson, and others. Some of these bots shared explicit images and insisted they were the real celebrities. The bots misled users, and they also violated the celebrities’ privacy because they were created without their consent.

Learn more about the scandal in which flirty chatbots created and shared on Facebook, Instagram, and WhatsApp were exposed for impersonating celebrities.

| Type of Deepfake Scam | Notable Example |
|---|---|
| Viral Video Hoaxes | Anne Hathaway '6.9 joke' clip |
| AI Chatbots | Meta's flirty Anne Hathaway bots |
| Explicit Deepfake Images | Non-consensual celebrity images shared online |

What to Do If You Encounter or Suspect a Deepfake

Don’t panic if you suspect you’ve come across a deepfake. There are clear steps you can take to verify, report, and protect yourself and others:

- Stop and Investigate: Pause before believing or sharing sensational content.

- Fact-Check: Look for trustworthy news sources or fact-checking sites that have reviewed the video or image.

- Report It: Use platform tools to flag deepfake or impersonation content for removal.

- Warn Friends: Let others know if a viral video is fake, especially in group chats or social media circles.

- Strengthen Privacy: Be mindful of how much personal info you share online—scammers can use your images, too.

Why Legal and Platform Responses Matter

Platforms like Meta have begun removing deepfake content once it is exposed, but their policies and enforcement often lag behind the technology. Legal recourse is also evolving, and pressure is growing to create better protections for victims. These are positive steps, but they are not a complete solution. Your own vigilance remains your best defense.

Read how major platforms responded when Meta created flirty chatbots of Taylor Swift, Anne Hathaway, and other celebrities without their consent.

Practical Tips to Protect Yourself from Deepfake Scams

- Verify before you share: Double-check videos or images that seem shocking or out-of-character.

- Educate friends and family: Spread awareness about deepfakes, especially with those less tech-savvy.

- Use trusted sources: For celebrity news, check official accounts or reputable outlets.

- Strengthen your privacy: Limit what you share and who can view your content on social media.

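- Leverage AI detection tools: Some browser extensions and online services are designed to flag likely deepfakes. They can help, but none is foolproof, so treat their results as one signal among several.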

On a lighter note, if you see Anne Hathaway suddenly offering investment advice or making wild jokes you’d never expect, it’s probably not her! When in doubt, a healthy dose of skepticism is your best friend online.